Optimizing Bike Sharing at SURP

The SURP Poster Session this past July was a great opportunity for Computer Science students to show off what they had accomplished over the summer. From anomaly detection programs to smart home technology, the event featured a wide variety of research projects. One such project, presented by Brian Campana, aimed to improve city bike-sharing systems using deep reinforcement learning. Brian had a lot to share about his research and findings on this topic, so let's dive right into it.

Due to concerns about traffic and pollution, more and more people are turning to alternative modes of transportation such as bicycles. This has led to ever-growing demand for rental bikes in major US cities like NYC. As a result, bike rental stations often become unbalanced: some do not have enough bikes to meet local demand. This problem grows as rental bikes become more popular, so naturally there has been research into how to mitigate it. This is where Brian's research project comes into play.

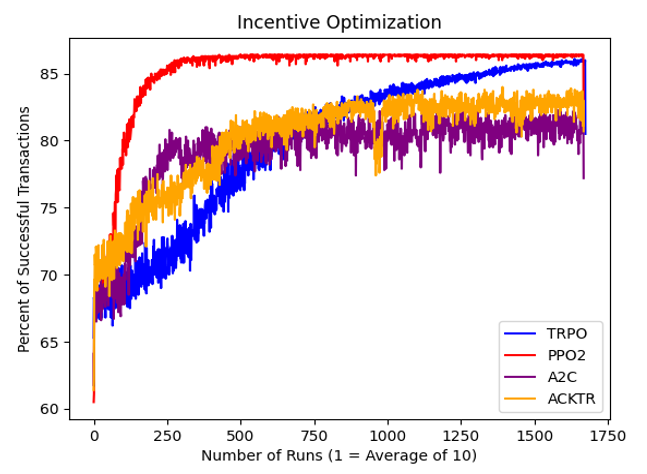

Brian has been researching one potential solution: incentivizing bike users to ride their bikes to the rental stations that need them. He tested this solution through a simulation, in which a computer program simulates the activity of bike users and bike rental stations. The program is then trained to offer incentives that lead the simulated users to redistribute bikes among the rental stations. This training uses a process called deep reinforcement learning (DRL), in which a neural network learns by trial and error, adjusting its behavior to maximize a reward signal. Several DRL training methods were tested to see which were the most effective, along with a run with no incentive for comparison.
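To make the setup more concrete, here is a minimal sketch of what such a simulation could look like. It is not Brian's code: everything in it (`ToyBikeEnv`, `success_rate`, `refill_emptiest`, the demand pattern, the user model, and the numbers) is a hypothetical assumption. Stations sit on a one-dimensional line, most trips start at one station and drain it, and a user accepts an incentive if it covers a random personal cost. "Success" is assumed here to mean a user found a bike. The environment loosely follows the reset/step pattern used by reinforcement learning toolkits, and the hand-written `refill_emptiest` policy is only a stand-in for what a trained DRL agent would learn.

```python
import random


class ToyBikeEnv:
    """Hypothetical one-dimensional bike-sharing simulation (not Brian's code).

    Stations sit at positions 0..n-1 on a line. Each step, one simulated user
    arrives at a station and wants to ride to a random station. The trip only
    succeeds if the pickup station has a bike. An optional incentive asks the
    user to return the bike to a chosen station instead of their destination.
    """

    def __init__(self, n_stations=5, bikes_per_station=2, demand_skew=0.7, seed=0):
        self.n = n_stations
        self.start_bikes = bikes_per_station
        # Assumed demand pattern: most trips start at station 0, draining it.
        self.demand_skew = demand_skew
        self.seed = seed
        self.reset()

    def reset(self):
        """Restore the initial bike counts and random seed; return the state."""
        self.rng = random.Random(self.seed)
        self.bikes = [self.start_bikes] * self.n
        return tuple(self.bikes)

    def step(self, action):
        """Simulate one trip. `action` is (target_station, incentive) or None.

        Returns (new_state, reward), where reward is 1.0 if the user found a
        bike and 0.0 otherwise.
        """
        if self.rng.random() < self.demand_skew:
            origin = 0
        else:
            origin = self.rng.randrange(self.n)
        dest = self.rng.randrange(self.n)
        if self.bikes[origin] == 0:
            return tuple(self.bikes), 0.0  # failed trip: station was empty
        self.bikes[origin] -= 1
        if action is not None:
            target, incentive = action
            # Assumed user model: accept if the incentive covers a random
            # personal cost drawn uniformly from [0, 1).
            if incentive >= self.rng.random():
                dest = target
        self.bikes[dest] += 1
        return tuple(self.bikes), 1.0


def success_rate(env, policy, steps=10_000):
    """Fraction of simulated trips that found a bike under `policy`."""
    state = env.reset()
    successes = 0.0
    for _ in range(steps):
        state, reward = env.step(policy(state))
        successes += reward
    return successes / steps


def no_incentive(state):
    """Baseline: never offer an incentive."""
    return None


def refill_emptiest(state):
    """Hand-written stand-in for a learned policy: offer a fixed incentive
    to drop the bike at whichever station currently has the fewest bikes."""
    target = min(range(len(state)), key=lambda i: state[i])
    return (target, 0.5)


env = ToyBikeEnv(seed=1)
print("no incentive:", success_rate(env, no_incentive))
print("with incentive:", success_rate(env, refill_emptiest))
```

In an actual DRL setup, a method such as PPO would replace `refill_emptiest` with a neural network that chooses the target station and incentive amount, trained to maximize the reward returned by `step`. Using one reward per successful trip keeps the training objective aligned with the success rate being reported.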

After testing, Brian analyzed the results. The no-incentive run had a success rate of about 44%. The most effective DRL method nearly doubled that, reaching a success rate of about 86%. That method was Proximal Policy Optimization (PPO), specifically the PPO2 implementation. The results of all the DRL methods can be seen in the accompanying graph. Every method improved substantially over the no-incentive test, but PPO2 was the most successful of all.
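The "nearly doubled" comparison can be checked directly from the two reported success rates:

$$\frac{86\%}{44\%} \approx 1.95, \qquad 86\% - 44\% = 42 \text{ percentage points}$$

So PPO2's success rate was just under twice that of the no-incentive run.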

That said, there are still many avenues to explore in this line of research. Brian's project demonstrates the usefulness of incentives in simulation, but more variables need to be examined. For example, the simulation environment is currently one-dimensional: it does not represent the complexity of an actual city, nor does it account for the distance between locations. Future testing would require a two-dimensional environment. Ideally, these two-dimensional tests could also incorporate data from NYC's Citi Bike rental system, so the algorithm could be tested on a real city.

That covers the broad strokes of Brian's research project. While more variables remain to be tested, the research demonstrates the potential of incentives for optimizing bike rental systems in large cities. As stated before, bike rental is on the rise, so finding a way to optimize it is increasingly urgent. Research like this is paving the way for the transportation of the future. Be sure to check in again soon to see what other scientific contributions Rowan's Computer Science students are making.

Written by Cole Goetz | Posted 2021.10.22